Tweets, Metadata, and Other Controversial Topics — And a New Paper

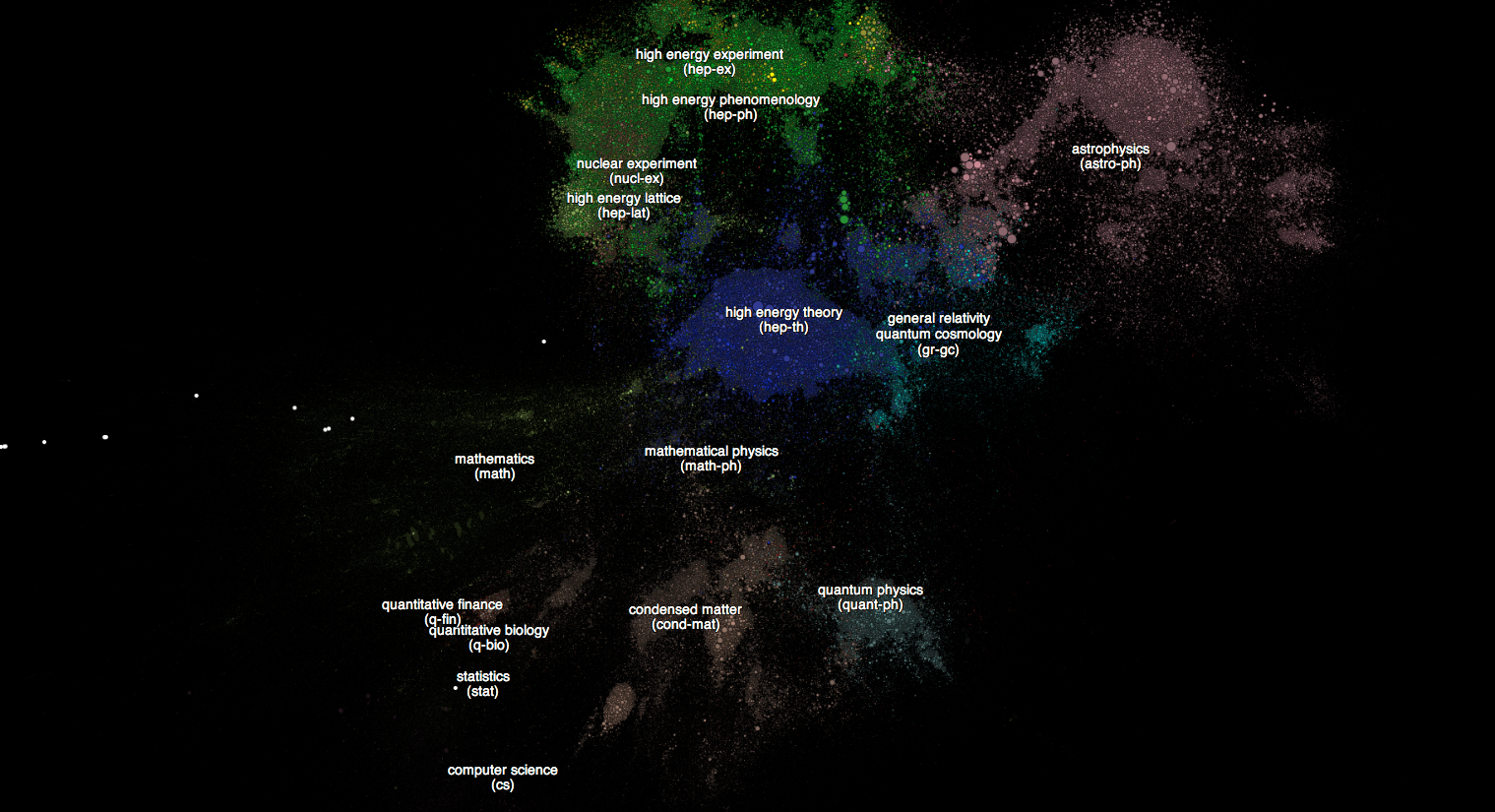

First, a pretty picture.

These clouds of points represent 866,543 papers from the arXiv, an open access scientific publishing site first created in 19911. This map of the arXiv universe is maintained by Damien George and Rob Knegjens over at paperscape. It's hot off the presses, having only been released this month. I found out about it from Sean Carroll's blog.

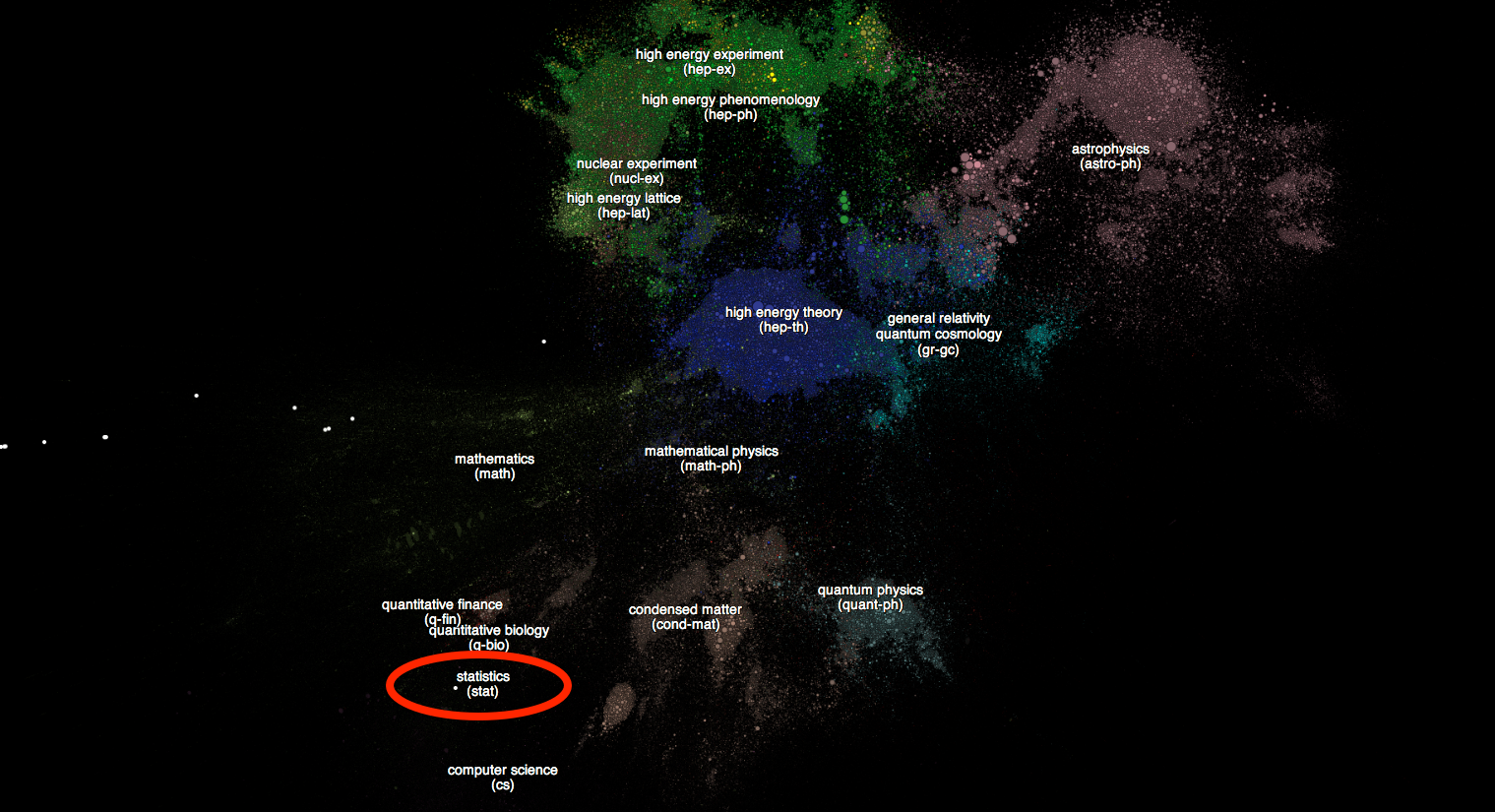

The natural first thing to do when you come across such a map is to search for oneself2. I only have a single paper housed on the arXiv, from work I did this winter on prediction in social media. That paper shows up as a lonely blip in the statistics cluster of the arXiv:

I'm happy3 to have it situated there. I'm an aspiring statistician, of the weakest sort: in the tool using, not the tool making, sense.

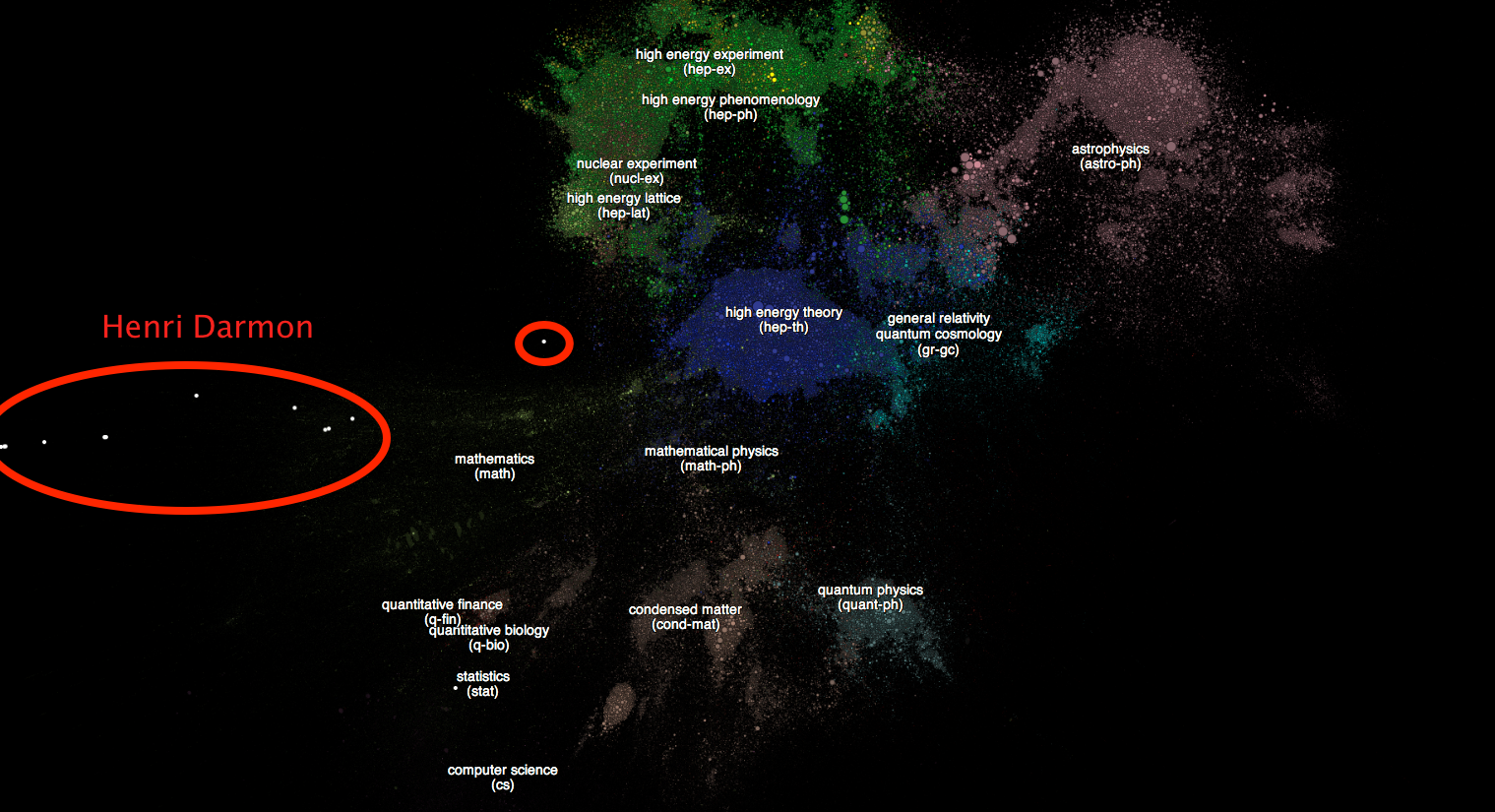

You'll notice another sprinkling of white points, in the offshoot from the math cluster. I knew those papers didn't correspond to any work I did, despite my academic home in the University of Maryland's Mathematics department. Upon closer investigation with paperscape, I determined these papers were written by or contained references to a Canadian mathematician by the name of Henri Darmon4:

Henri is a number theorist at McGill University, and according to this page, "[o]ne of the world's leading number theorists, working on Hilbert's 12th problem." One of his inventions is Darmon points, something useful enough to have symposiums about.

Since my interests don't frequently stray into number theory, I don't imagine either of us has to worry about name pollution. (Especially Henri.) I'll have to start working on my own version of Darmon *.

Clearly, physics dominates the arXiv 'galaxy.' The arXiv started as a repository for high energy physics papers. I didn't realize how comparatively few papers from other disciplines get published to the arXiv.↩

Actually, the zeroth thing I did was search for my advisor.↩

During an exit interview after an internship at Oak Ridge that lead to this paper, I told the head PI that I would never use probabilistic models again. Further evidence that we shouldn't trust our past selves to have half a clue about what our future selves will want.↩

Darmon is a relatively common French name. At least, that was the impression during a trip to Paris where a new acquaintances attempt to find me on Facebook was hindered by the prevalence of David Darmon's in France. My entire life I thought I had a rare name, but the rareness is very much geographically determined.↩