Rock, Paper, Scissors… Random!

First, watch this video. It's a talk (really more of a chat) by Jad Abumrad of Radiolab1 fame.

Done watching? Then you don't need me to tell you Jad, in part, discusses the uses of randomness in nature. Here's a favorite quote of mine:

What they basically saw is the randomness of biology guiding behavior. Now you could try and explain this randomness away, and people have. And certainly this is speculative what I just said. But essentially what they saw was that the monkey's — the latent stochastic chaos in the monkey's brain was contributing to an effective rock-paper-scissors strategy. Because you have to be unpredictable!

"You have to be unpredictable!" The line about a rock-paper-scissors strategy got me to thinking of this little game the New York Times threw together: Rock-Paper-Scissors: You vs. the Computer. Okay, they didn't invent the game. But they did think to use some statistical analysis of human rock-paper-scissors behavior to find a winning strategy. As they warn in the text:

Computers mimic human reasoning by building on simple rules and statistical averages. Test your strategy against the computer in this rock-paper-scissors game illustrating basic artificial intelligence. Choose from two different modes: novice, where the computer learns to play from scratch, and veteran, where the computer pits over 200,000 rounds of previous experience against you.

Note: A truly random game of rock-paper-scissors would result in a statistical tie with each player winning, tying and losing one-third of the time. However, people are not truly random and thus can be studied and analyzed. While this computer won't win all rounds, over time it can exploit a person's tendencies and patterns to gain an advantage over its opponent.

I'll repeat the key line, because it loops back around to Jad's comment above:

However, people are not truly random and thus can be studied and analyzed. While this computer won't win all rounds, over time it can exploit a person's tendencies and patterns to gain an advantage over its opponent.

Try playing a few rounds. I'll wait.

Back? Did you win?

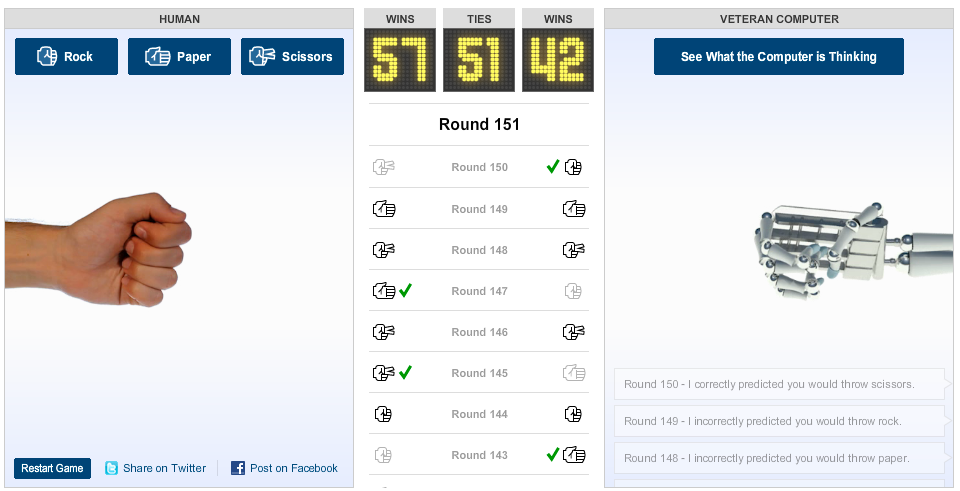

I did. And my 'strategy' was simple: be random. If you understand how the computer chooses its moves, it becomes very easy to beat. Here's one run of 150 trials with the computer on veteran:

As you can see, I won. Not by a large margin. But if I kept playing, the proportions of wins/ties/losses would stabilize, and not to (1/3, 1/3, 1/3).

Why? Because my strategy was this:

numpy.random.randint(3, size = 150)2

array([0, 2, 1, 2, 2, 1, 2, 0, 1, 2, 2, 0, 1, 2, 1, 0, 2, 1, 0, 2, 2, 0, 2, 1, 2, 0, 0, 0, 1, 1, 2, 0, 0, 0, 2, 0, 1, 2, 0, 2, 1, 2, 0, 2, 0, 0, 2, 0, 2, 0, 1, 2, 0, 2, 0, 1, 2, 2, 1, 1, 2, 0, 2, 0, 2, 0, 2, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 2, 1, 2, 1, 1, 2, 2, 1, 1, 2, 2, 1, 1, 1, 1, 2, 1, 1, 2, 2, 2, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 2, 0, 0, 2, 0, 0, 1, 2, 2, 1, 0, 0, 0, 2, 2, 2, 2, 1, 0, 0, 2, 0, 0, 1, 0, 0, 2, 2, 1, 2, 1, 2, 2, 1, 1, 2, 2, 0, 1, 0, 2, 2, 2,...

Basically, I sampled 150 draws from a uniform distribution over the values {0 (rock), 1 (paper), 2 (scissors)}. And then played those values out. Humans are bad at simulating randomness. But computers aren't. And using this strategy, you too can outperform the computer.

The New York Times explains why in their demo. As they say in the header text, 'the computer pits over 200,000 rounds of previous experience against you.' If you walk through their tutorial, they explain what this mean. Basically, they infer a Markov model of sorts. "What is the probability that Dave throws \(x\) given that in the three previous rounds, he threw \((d_1, d_2, d_3)\), and in the three previous rounds, I threw \((c_1, c_2, c_3)\)?" You can infer this probability using statistics (via maximum likelihood estimation, if they're smart), and predict my next move as the argument \(x\) that maximizes the conditional probability \(p(x | (d_1, d_2, d_3), (c_1, c_2, c_3))\). You'll infer a bunch of transition probabilities, and using those you can try to beat me.

But the computer was playing against people, not random number generators. Thus, the probabilities inferred don't agree with the new data-generating distribution (me, or really my random number generator), and so the algorithm does terribly. The learning method they use doesn't generalize to new data. Offline learning algorithms tend to do terribly with non-stationary distributions.

There are ways to get around this. A popular approach within the field of machine learning is online learning. This is aptly named, because you try to learn the data generating process as you go. This is a cool book on the topic that I'll have to add to the long list of textbooks I'd like to study.

If you'd like to play along at home, you can get out your favorite six-sided die and go to town beating the computer. It won't mind.

What's that? You don't listen to Radiolab? Shame on you. Stop reading this post and listen to one of their podcasts right now!↩

And randomness is a limited resource!↩