How to Compare Communities Using Variation of Information

Attention conservation notice: To compare communities in a network determined by differing methods, use Variation of Information, or some other statistic derived from the confusion matrix. But really, variation of information has a lot of nice properties.

Sometimes I write posts for this blog to answer questions I posed to Google without (immediate) answers1. I found myself asking such a question in mid-August.

The question: how can you compare the communities in a network determined by two (or more) different approaches?

Because I can't help myself, the basic setup is this. You have a graph \(G = (V, E)\) where \(V\) is the set containing the vertices of the graph and \(E\) is a binary operator determining whether two vertices are connected. That is, for two vertices \(u, v \in V\), we have \(uEv = 1\) if \(u\) and \(v\) are connected, and \(uEv = 0\) if they are not. These connections are often encoded in an adjacency matrix \(\mathbf{A}\) where the \((i, j)\) element of \(\mathbf{A}\) is \(a_{ij} = (\mathbf{A})_{ij} = v_{i}Ev_{j}\). (We'll assume an undirected network for now.) That is, \(\mathbf{A}\) is a matrix with binary entries that encodes the pairwise connections.

Once you have a collection of vertices \(V = \{ v_{1}, \ldots, v_{|V|}\}\), you might want to partition these nodes somehow. Usually we partition them based on the structural properties of the network, which are wrapped up in either \(E\) or \(\mathbf{A}\). For example, we might take a modularity-based approach2 and try to group together nodes so that there are more connections falling between the nodes in a particular community than from those nodes out to other communities. This approach was pioneered by Mark Newman and my advisor. We usually call the sets of nodes making up this partition communities (if we're working in some sort of social science) or modules (otherwise). The name 'community' should make sense: if we consider a social network, we expect people within a community (say, a club or organization) to have more connections to the members inside that community than without. For a prosaic example, consider the fact that at the University of Maryland, most of my friends are in the Mathematics department. (Fortunately, many of my friends in the Mathematics department don't suffer from this affliction.)

I won't focus on how to go about finding these communities. There are lots of methods out there. Which raises the question: if we use two different community detection algorithms, what is a useful way to compare how similar the communities are between the partitions each algorithm supplies? That is, we want some way to tell if we're getting basically the same communities with the two algorithms, and if so, how similar.

A person might go through and compare community by community, partition by partition, to see if the communities 'look' similar. This approach would work, but it doesn't scale well and its hard to automate. But the intuition is right. Instead of looking at individual nodes within the communities, we'll look at how much the different communities overlap in terms of all of their nodes.

Again, to be a bit more formal, suppose we have two partitions \(\mathcal{C} = \{C_{1}, \ldots, C_{|\mathcal{C}|}\}\) and \(\mathcal{C}' = \{C'_{1}, \ldots, C'_{|\mathcal{C}|}\}\) where each \(C_{i}\) is a collection of nodes. We can compute the confusion matrix \(\mathbf{N}\) from these two partitions, where \[ (\mathbf{N})_{kk'} = |\mathcal{C} \cap \mathcal{C}'|.\] In short, for a community \(k\) from partition \(\mathcal{C}\) and a community \(k'\) from partition \(\mathcal{C}'\), we count up the number of overlapping members (the number of nodes that appear in both partitions). The confusion matrix most naturally shows up in classification problems, where we have true and predicted class labels for each item in our data set, and we want to see how 'confused' our prediction algorithm got, overall. For the question we're interested in, we don't necessarily have 'true' communities (unless we do), but the confusion matrix still serves its purpose: it tells us how much overlap there is between the communities in each partition.

Once we have \(\mathbf{N}\), which will be a \(|\mathcal{C}| \times |\mathcal{C}'|\) matrix, we recognize that we have something very close to joint probability mass function that tells us the probability that a node, chosen at random from our network, lies in community \(k\) and community \(k'\). To get there, we just need to divide all of the entries in \(\mathbf{N}\) by \(|V|\), the number of nodes in our network. This does give us an (empirical) probability mass function \[p(k, k') = \frac{1}{|V|}(\mathbf{N})_{k k'}.\]

What sort of question do we want to ask using this distribution? Remember our motivating question: how different are the partitions induced by the two community detection algorithms? If they're very different, knowing what community a node is in from partition \(|\mathcal{C}|\) shouldn't tell you what community the node is in \(|\mathcal{C}|\). On the other hand, if the induced partitions are very similar, then the opposite is the case: knowing the community from one partition might completely fix the community from the other partition. (Say, if the two partitions are identical.) We're talking about knowledge and 'surprise,' so it shouldn't be too shocking that the way we'll formalize this intuition is via (what else?) information theory.

Jumping to the punchline, the quantity we're interested in is called the variation of information between two random variables \(X\) and \(Y\), and we'll denote it by \(VI[X; Y]\). For our problem, \(X \in \{ 1, 2, \ldots, |\mathcal{C}|\}\) is the community from \(\mathcal{C}\) for a node chosen at random from the network, and \(Y \in \{ 1, 2, \ldots, |\mathcal{C}'|\}\) is the community from \(\mathcal{C}'\) for a node chosen at random. Thus, we see that \(p(k, k')\) is exactly the joint distribution for \((X, Y)\), that is \[ P(X = k, Y = k') = p(k, k'),\] since we've assumed that each node is chosen with equal probability.

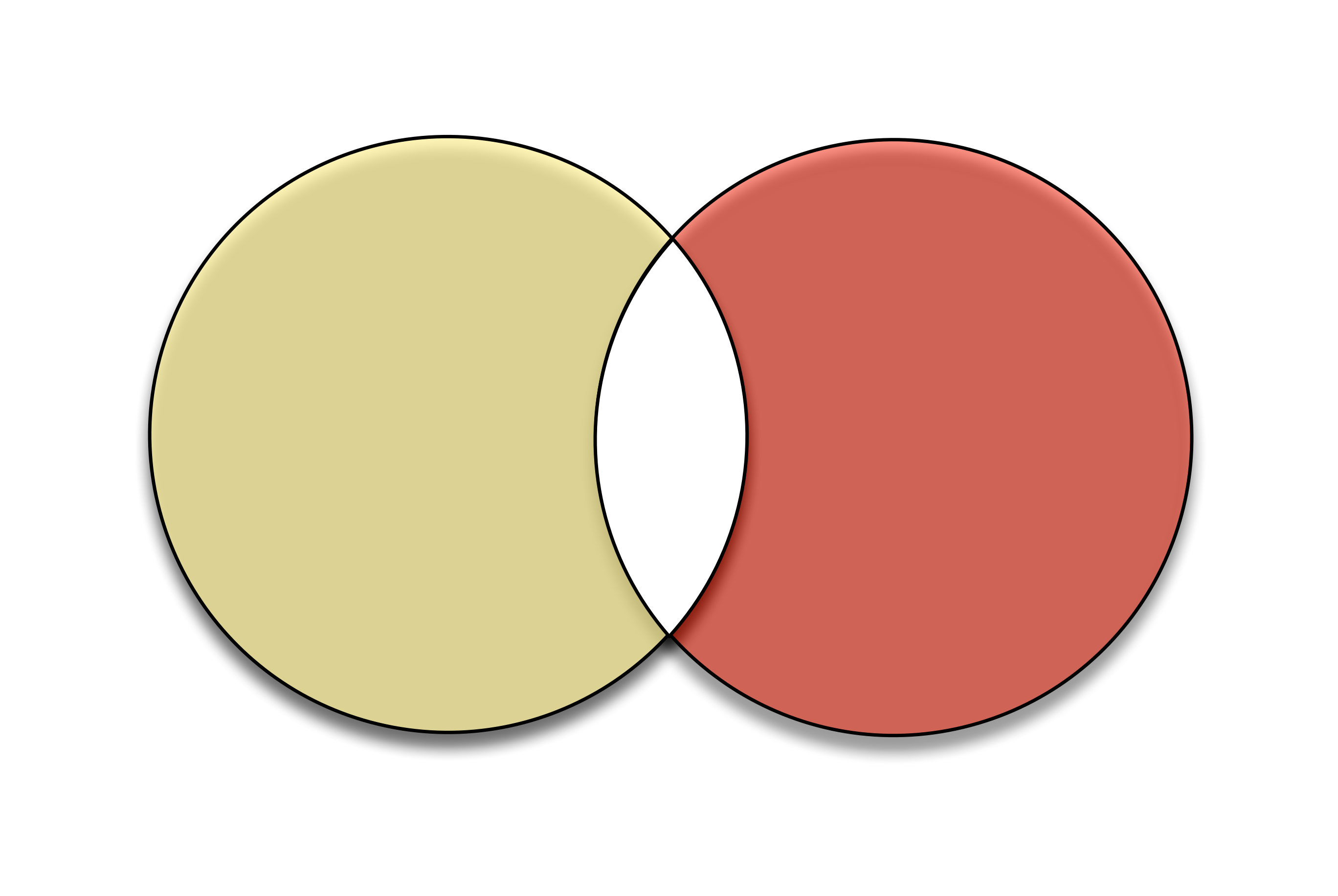

We saw how to represent the relationship between information theoretic quantities using information diagrams, a particular application of Venn diagrams. Variation of information makes up a simple portion of the information diagram: it's the non-overlapping portions of the Venn diagram,

From this, we can see that there are various equivalent definitions of variation of information, in particular \[\begin{align} VI[X; Y] &= H[X | Y] + H[Y | X] \\ &= H[X] + H[Y] - 2 I[X; Y] \\ &= H[X, Y] - I[X; Y]. \end{align}\]

Thinking about this, we see that variation of information will be maximal when \(X\) tells us nothing about \(Y\) and \(Y\) tells us nothing about \(X\) (the two circles are disjoint, so their mutual information is zero), which is precisely when the two partitions are as different as possible. Similarly, the variation of information is zero when the two circles completely overlap.

We saw that, almost by construction, variation of information matches the intuitive definition we might come up with for defining similarity between two partitions. But it also satisfies a bunch of nice mathematical properties. For example, it's a metric on the space of possible partitions, in the formal sense: it's non-negative, symmetric, and satisfies the triangle inequality. This means that it really does match our intuition of distance (since the concept of a metric is just a formalization of this intuition). Moreover, we can bound it above by \(2 \log_{2}{|V|}\), so we can normalize it between 0 and 1, everyone's favorite continuum.

Two examples: how do you compute the density of a random variable after application of a non-monotonic transformation? How is the survival function used to compute expectations? These now show up (when logged in under my Google account) on the top page. And given the traffic to my website, a lot of people find the second result (the 'Darth Vader rule') useful. I owe the internet this. I used to end up at this site every time I wanted to write a piecewise function in LaTeX.↩

Though this isn't always called for. See this paper by Aaron Clauset on the pitfalls and perils of using modularity maximization in real-world contexts. This paper should be required reading for anyone interested in community detection!↩