Parametric, Semiparametric, and Nonparametric Models

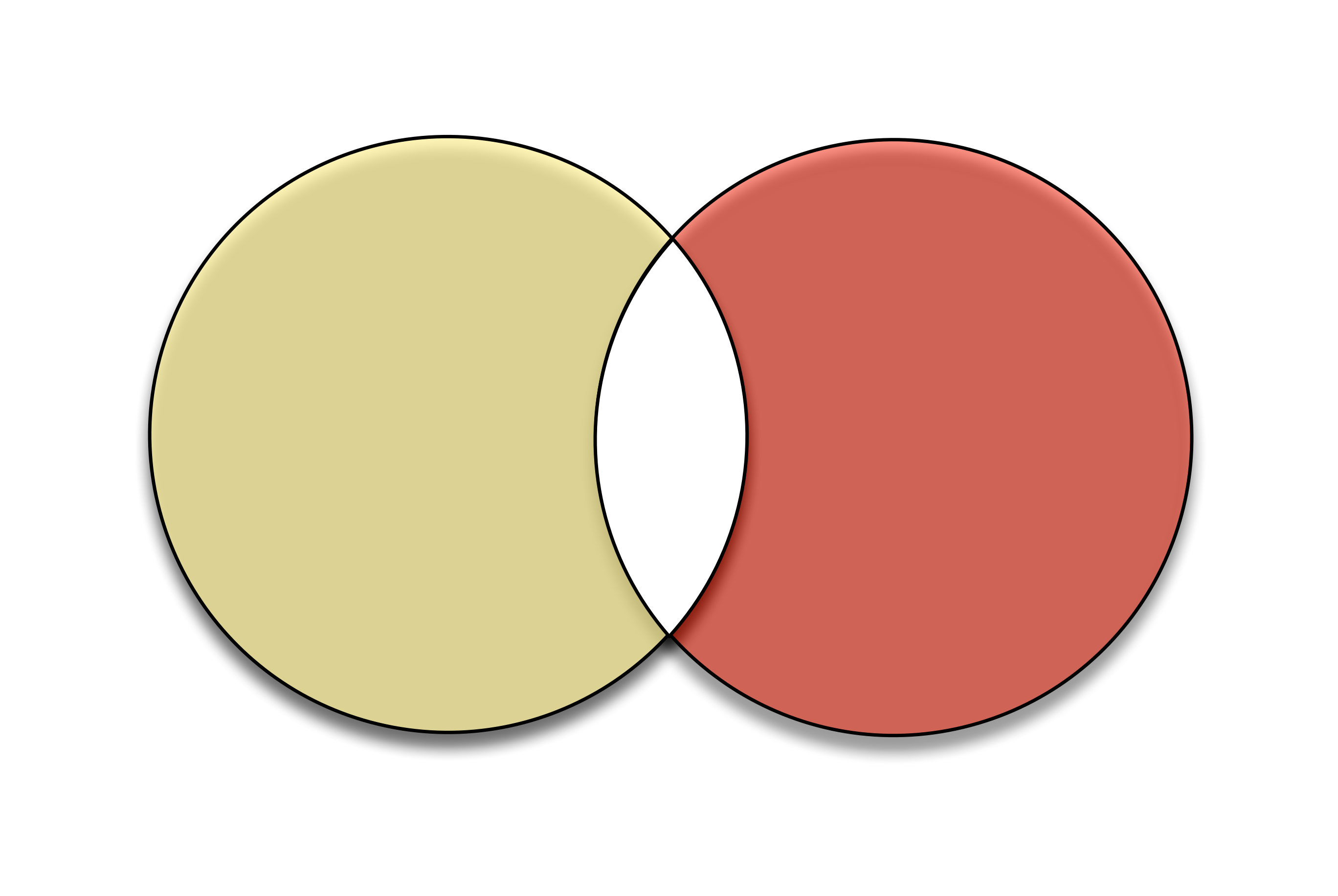

In reading the paper related to this post¹, I came across a new class of statistical models I hadn't heard of before: semiparametric models.

We're all familiar with parametric models, even if we don't call them that. We introduce students to these types of models in statistics courses: the normal model, the exponential model, the Poisson model, etc.² For example, we can specify a normal random variable by writing down its density function

\[ f_{X}(x) = \frac{1}{\sqrt{2 \pi \sigma^{2}}} \exp \left[ -\frac{1}{2 \sigma^{2}}(x - \mu)^{2} \right], \quad x \in \mathbb{R}. \]

From this density function, we can compute any quantity we might want to know about a random variable \(X\) with this density. But notice that all we need to specify the density are the mean \(\mu\) and the variance \(\sigma^{2}\) (or, in more general terminology, 'location' and 'scale'). We can wrap both up into a parameter vector \(\theta = (\mu, \sigma^{2})\), and we trivially see that this vector lives in a subset of \(\mathbb{R}^{2}\), namely \(\mathbb{R} \times (0, \infty)\). It lives in a finite-dimensional space.
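To make the "two numbers are enough" point concrete, here is a minimal Python sketch. The function names `normal_pdf` and `normal_cdf` are our own; the CDF uses the standard identity \(\Phi(z) = \tfrac{1}{2}\left[1 + \operatorname{erf}(z/\sqrt{2})\right]\). Everything is computed from \(\theta = (\mu, \sigma^{2})\) alone.

```python
import math

def normal_pdf(x: float, theta: tuple[float, float]) -> float:
    """Normal density at x, given only theta = (mu, sigma2)."""
    mu, sigma2 = theta
    return math.exp(-((x - mu) ** 2) / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)

def normal_cdf(x: float, theta: tuple[float, float]) -> float:
    """P(X <= x), computed from the same two numbers via the error function."""
    mu, sigma2 = theta
    return 0.5 * (1 + math.erf((x - mu) / math.sqrt(2 * sigma2)))

theta = (0.0, 1.0)  # standard normal: mu = 0, sigma^2 = 1
print(round(normal_pdf(0.0, theta), 4))   # 0.3989
print(round(normal_cdf(1.96, theta), 4))  # 0.975
```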

But suppose we want to talk about a model that includes every density with mean 0. We can't specify such a model with a finite set of parameters: we know the mean is 0, but that's it, and the mean alone doesn't pin down the density. Instead, we have to think of the density as living in an infinite-dimensional space of functions.
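A quick numerical sketch of why "mean 0" isn't enough: three familiar densities, chosen here purely for illustration, all have mean 0 but different variances (1, 2, and 1/3), so knowing the mean leaves the density undetermined. The helper `integrate` is a hypothetical midpoint-rule integrator written for this example, not a library function.

```python
import math

# Three illustrative densities, all with mean 0 (our own choices for this sketch).
def normal(x: float) -> float:   # standard normal
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def laplace(x: float) -> float:  # Laplace with location 0, scale 1
    return 0.5 * math.exp(-abs(x))

def uniform(x: float) -> float:  # uniform on [-1, 1]
    return 0.5 if -1.0 <= x <= 1.0 else 0.0

def integrate(f, lo: float = -40.0, hi: float = 40.0, n: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    dx = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * dx) for i in range(n)) * dx

densities = {"normal": normal, "laplace": laplace, "uniform": uniform}
for name, f in densities.items():
    mean = integrate(lambda x: x * f(x))
    var = integrate(lambda x: x * x * f(x))  # equals the variance because the mean is 0
    print(f"{name:8s} mean={mean:+.4f} variance={var:.4f}")
```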

Before making that precise, let's look at a more familiar example. Think about quadratic polynomials,

\[ a x^{2} + b x + c, \text{ where } (a, b, c) \in \mathbb{R}^{3}.\]

This family of quadratic polynomials exhibits the properties of a vector space (closure under addition and scalar multiplication, existence of a zero element, etc.)³. This seems obvious once we wrap up the coefficients into the triple \((a, b, c)\); in fact, the space of quadratic polynomials is isomorphic to \(\mathbb{R}^{3}\).

The important thing to notice here is that, to specify a quadratic function, we need only specify three real numbers, \((a, b, c)\). Thus, this vector space is finite-dimensional⁴.
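The isomorphism with \(\mathbb{R}^{3}\) can be checked mechanically. In this minimal Python sketch (the helper names `evaluate`, `add`, and `scale` are our own), a quadratic is stored as its coefficient triple \((a, b, c)\), and ordinary vector operations on triples agree with the corresponding pointwise operations on the functions.

```python
import math

def evaluate(coeffs, x):
    """Evaluate a*x**2 + b*x + c for coeffs = (a, b, c)."""
    a, b, c = coeffs
    return a * x**2 + b * x + c

def add(p, q):
    """Vector addition in R^3, i.e. coefficient-wise."""
    return tuple(pi + qi for pi, qi in zip(p, q))

def scale(k, p):
    """Scalar multiplication in R^3."""
    return tuple(k * pi for pi in p)

p = (1.0, -2.0, 3.0)    # x^2 - 2x + 3
q = (0.5, 4.0, -1.0)    # 0.5x^2 + 4x - 1
zero = (0.0, 0.0, 0.0)  # the zero polynomial

for x in [-1.0, 0.0, 2.0, 3.7]:
    # Adding or scaling coefficient triples matches doing the same to the functions.
    assert math.isclose(evaluate(add(p, q), x), evaluate(p, x) + evaluate(q, x))
    assert math.isclose(evaluate(scale(2.5, p), x), 2.5 * evaluate(p, x))
    assert evaluate(zero, x) == 0.0

print(add(p, q))  # (1.5, 2.0, 2.0)
```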

As a contrasting example, consider the space of continuous functions on an interval such as \([0, 1]\). We know we can approximate any continuous function there as closely as we like using polynomials of finite degree (this is the Weierstrass approximation theorem), but in general no finite set of coefficients recovers it exactly. Even a very well-behaved function like \(e^{x}\) needs infinitely many Taylor coefficients \((a_{0}, a_{1}, a_{2}, \ldots)\), since \(e^{x} = \sum_{n=0}^{\infty} x^{n}/n!\), and many continuous functions aren't even differentiable, so they have no Taylor expansion at all. More directly, the monomials \(1, x, x^{2}, \ldots\) are all continuous and linearly independent, so no finite basis can span the space. Thus, the space of continuous functions is an infinite-dimensional vector space.
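The \(e^{x}\) example can be made concrete with a short Python sketch (the helper `taylor_exp` is our own). Truncating the series after finitely many terms leaves an error that shrinks quickly but, in exact arithmetic, is never zero for \(x > 0\), since every remaining term \(x^{n}/n!\) is positive.

```python
import math

def taylor_exp(x: float, n_terms: int) -> float:
    """Partial sum of the Taylor series e^x = sum_{n >= 0} x^n / n!, using n_terms terms."""
    return sum(x**n / math.factorial(n) for n in range(n_terms))

x = 1.0
for n_terms in [1, 2, 5, 10, 15]:
    error = abs(math.exp(x) - taylor_exp(x, n_terms))
    print(f"{n_terms:2d} terms: error = {error:.2e}")
```

Each extra coefficient buys more accuracy, but no finite list of them is the function itself.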

In statistics, when we can't specify a model using a finite set of parameters, we call the resulting model non-parametric. This name is a little confusing, since non-parametric models often have parameters. For example, kernel density estimators have a smoothing parameter (the bandwidth) \(h\). As we saw, a better name for these types of models might be 'infinite-dimensional.' But that sounds scarier.
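To see that the bandwidth \(h\) is a tuning knob rather than a finite description of the density, here is a minimal NumPy sketch of a Gaussian-kernel density estimator, \(\hat{f}(x) = \frac{1}{nh}\sum_{i=1}^{n} \phi\left(\frac{x - x_{i}}{h}\right)\). The function name `kde_gaussian` and the simulated data are our own assumptions; for real work, `scipy.stats.gaussian_kde` offers automatic bandwidth selection.

```python
import numpy as np

def kde_gaussian(data: np.ndarray, grid: np.ndarray, h: float) -> np.ndarray:
    """Gaussian-kernel density estimate with bandwidth h, evaluated on grid:
    f_hat(x) = (1 / (n h)) * sum_i phi((x - x_i) / h), phi = standard normal pdf."""
    u = (grid[:, None] - data[None, :]) / h          # shape (len(grid), n)
    phi = np.exp(-0.5 * u**2) / np.sqrt(2 * np.pi)
    return phi.sum(axis=1) / (len(data) * h)

rng = np.random.default_rng(0)
data = rng.normal(size=500)           # simulated sample, for illustration only
grid = np.linspace(-8.0, 8.0, 1601)   # evaluation points, spacing 0.01
dx = grid[1] - grid[0]

for h in [0.1, 0.4, 1.5]:
    f_hat = kde_gaussian(data, grid, h)
    # Each estimate is a whole curve built from all 500 points; h only sets how smooth it is.
    print(f"h = {h}: area under curve = {f_hat.sum() * dx:.3f}, peak height = {f_hat.max():.3f}")
```

The "model" here is essentially the data themselves: the estimate grows in complexity with \(n\), which is what makes it non-parametric despite having the parameter \(h\).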

Modern statistics has a lot of non-parametric models to choose from⁵. That's a good thing since, as I mentioned in the second note below, we usually have no reason to think nature should follow a parametric model we can write down. For density estimation, we have kernel density estimators, which, for smooth densities and a well-chosen bandwidth, converge at essentially the best rate we could hope for. For regression, we have splines, kernel smoothers, and much more.

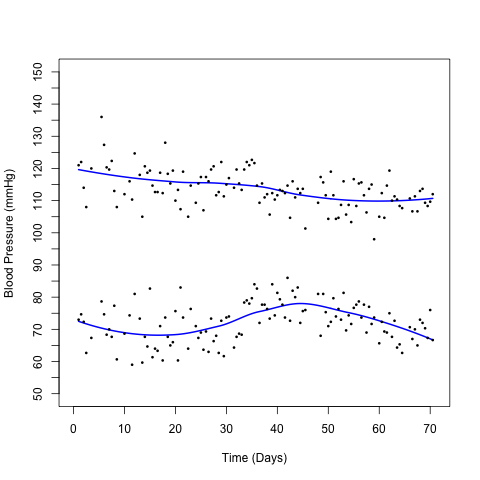

Semiparametric models lie in the grey area between parametric and non-parametric models. To specify a semiparametric model, you must specify both a finite-dimensional vector of parameters and an infinite-dimensional function. As a simple example, consider a regression model

\[ Y = \boldsymbol{\beta}^{T} \mathbf{X} + g(Z) + \epsilon.\]

The first bit, \(\boldsymbol{\beta}^{T} \mathbf{X}\), should be familiar from linear regression: we have covariates / features \(\mathbf{X}\) and a parameter vector \(\boldsymbol{\beta}\). \(\boldsymbol{\beta}\) is the finite-dimensional part of the model. The second bit, \(g(Z)\), is an arbitrary (usually assumed smooth) function, and makes up the infinite-dimensional part of the model. Of course, we know neither \(\boldsymbol{\beta}\) nor \(g\), and must infer them from data.
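One standard way to infer both, swapped in here for illustration and not necessarily what the paper uses, is the "partialling-out" (double residual) estimator. Taking conditional expectations given \(Z\), and assuming \(E[\epsilon \mid \mathbf{X}, Z] = 0\), gives \(E[Y \mid Z] = \boldsymbol{\beta}^{T} E[\mathbf{X} \mid Z] + g(Z)\); subtracting this from the model makes \(g\) drop out: \(Y - E[Y \mid Z] = \boldsymbol{\beta}^{T}(\mathbf{X} - E[\mathbf{X} \mid Z]) + \epsilon\). The sketch below assumes a scalar \(X\), a Gaussian-kernel Nadaraya-Watson smoother with a hand-picked bandwidth \(h = 0.05\), and simulated data with \(\beta = 1.5\) and \(g(z) = \sin(2\pi z)\); the names `nw_smooth`, `beta_hat`, and `g_hat` are our own.

```python
import numpy as np

def nw_smooth(z_train, v, z_eval, h):
    """Nadaraya-Watson estimate of E[v | Z = z] at each z in z_eval (Gaussian kernel, bandwidth h)."""
    w = np.exp(-0.5 * ((z_eval[:, None] - z_train[None, :]) / h) ** 2)
    return (w @ v) / w.sum(axis=1)

# Simulated data from Y = beta * X + g(Z) + eps (all choices are assumptions for this sketch).
rng = np.random.default_rng(1)
n, beta_true = 2000, 1.5
Z = rng.uniform(0.0, 1.0, size=n)
X = Z + 0.5 * rng.normal(size=n)   # X is correlated with Z, so ignoring g(Z) biases beta
Y = beta_true * X + np.sin(2 * np.pi * Z) + 0.3 * rng.normal(size=n)

# Step 1: partial Z out of both Y and X with a nonparametric smoother.
h = 0.05
m_Y = nw_smooth(Z, Y, Z, h)
m_X = nw_smooth(Z, X, Z, h)
r_Y, r_X = Y - m_Y, X - m_X

# Step 2: least squares of the Y-residuals on the X-residuals (no intercept) estimates beta.
beta_hat = (r_X @ r_Y) / (r_X @ r_X)

# Step 3: plug beta_hat back in to estimate g(z) = E[Y | Z = z] - beta * E[X | Z = z].
g_hat = m_Y - beta_hat * m_X

naive = np.polyfit(X, Y, 1)[0]  # ordinary linear regression that ignores g(Z)
print(f"beta_hat = {beta_hat:.3f}, naive slope = {naive:.3f}")
```

Because \(g(Z)\) is correlated with \(X\) in this simulation, the naive slope should come out well away from 1.5 while `beta_hat` should land close to it; the exact numbers depend on the seed and bandwidth.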

¹ Something I hope to write about in a bit, since it's related to some reading I've been doing about sampling with networks.

² It's worth asking the question: why should nature follow a simple model that 18th-, 19th- (and 20th-) century mathematicians, statisticians, and physicists could write down? Sometimes, we have good reasons, both theoretical and physical, to assume a certain model. Often, we don't.

³ Most people learn about vector spaces for the first time in linear algebra. What frequently catches them off guard is that nonlinear functions like the quadratic can still form a vector space. But our attention is now on the coefficients \((a_{n}, \ldots, a_{0})\), not the variable \(x\): the polynomials are linear in the coefficients.

⁴ Technically, it's finite-dimensional because it has a finite basis, such as \(\{x^{2}, x, 1\}\).

⁵ From my very limited vantage point, the two main contributions of modern statistics have been non-parametric methods and methods for dealing with high-dimensional inference.

This looks very much like a (biased) random walk, and for good reason: if we take the logarithm of a multiplicative process, it becomes an additive process, and we in fact find ourselves with a random walk. This explains the use of logarithms throughout. Logarithms also make the computer much happier. If we shaded the lines in by the number of sample paths that traverse them, we would see that most people follow along the central trajectory, with fewer and fewer people following along the further-out branches. (We see this explicitly in the most extreme trajectories, which don't get traced out with only 10000 sample paths.) We can of course compute the proportion of walks that end up at each node, first for \(t = 1\)
