Pitfalls of Estimating Information Theoretic Quantities from Continuous Data

Information theory is a beautiful tool for dealing with problems arising in probability theory and stochastic processes. Many would like to bring this tool 'kicking and screaming' into the real world, to uncover structure otherwise unseen by other methods.

This makes complete sense. And is something I've done on more than one occasion. But a bit of care must be taken, to make sure we're not computing nonsense.

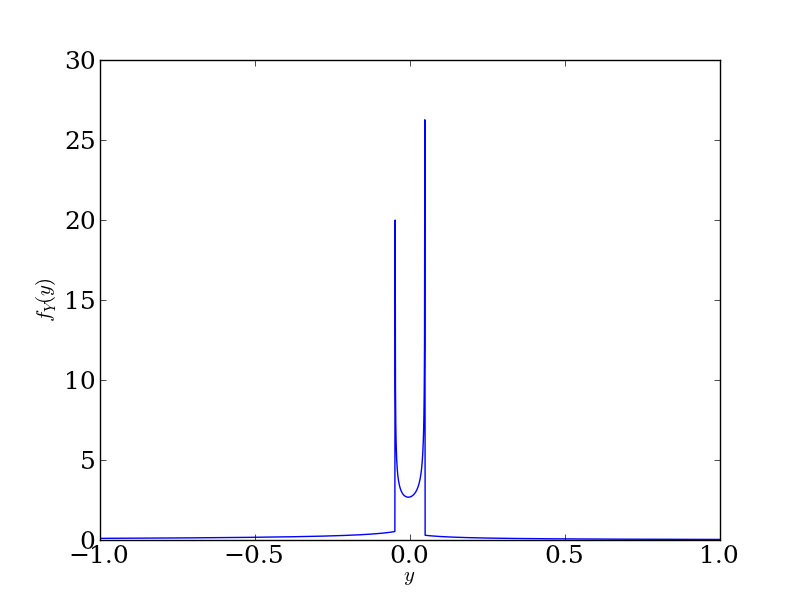

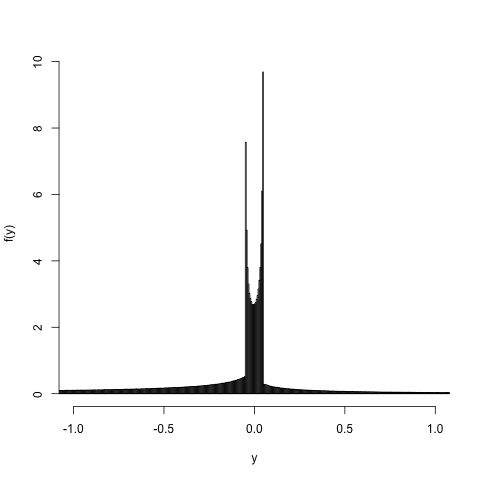

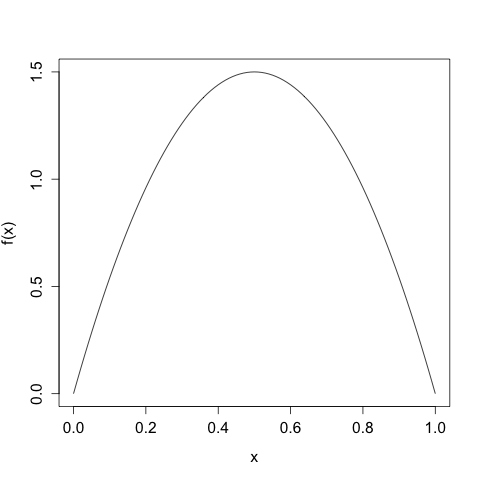

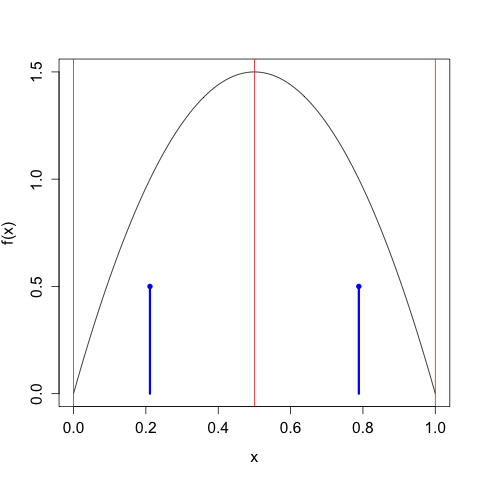

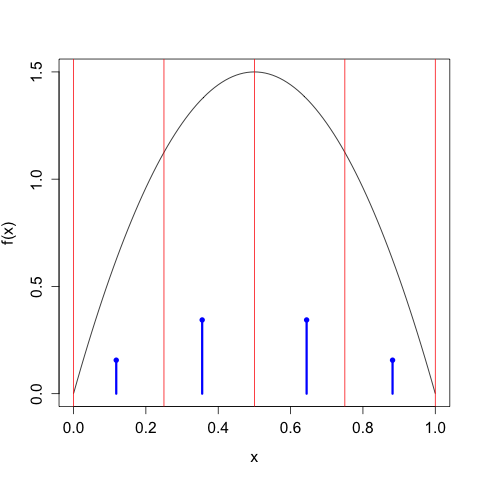

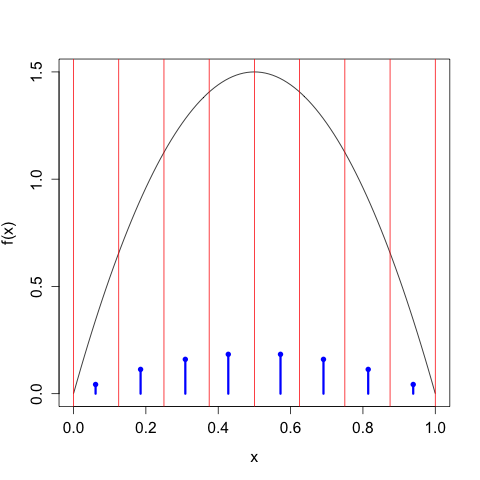

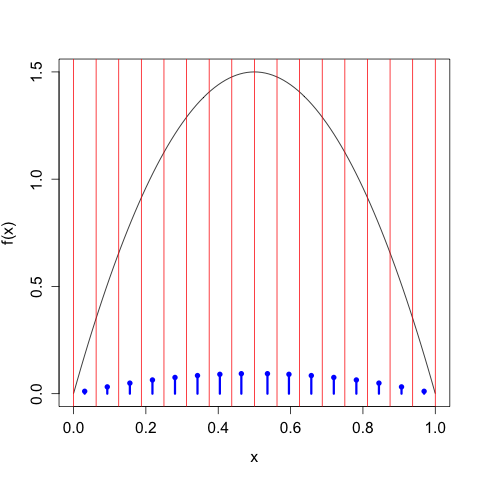

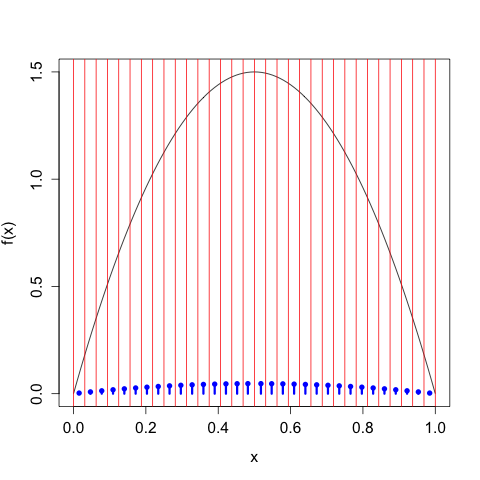

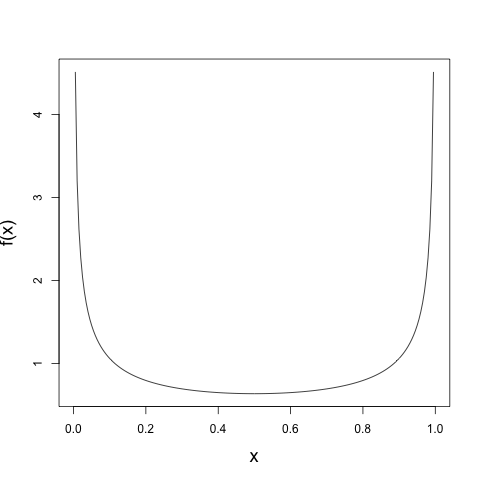

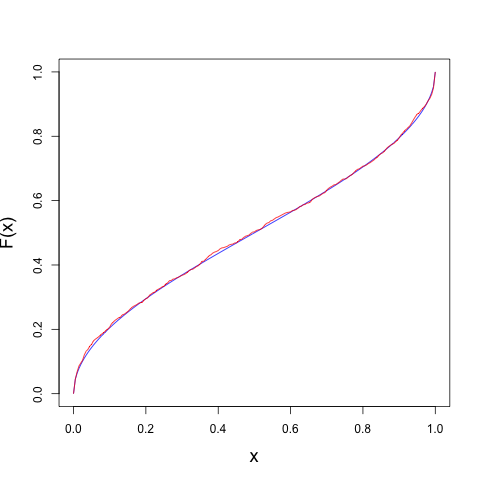

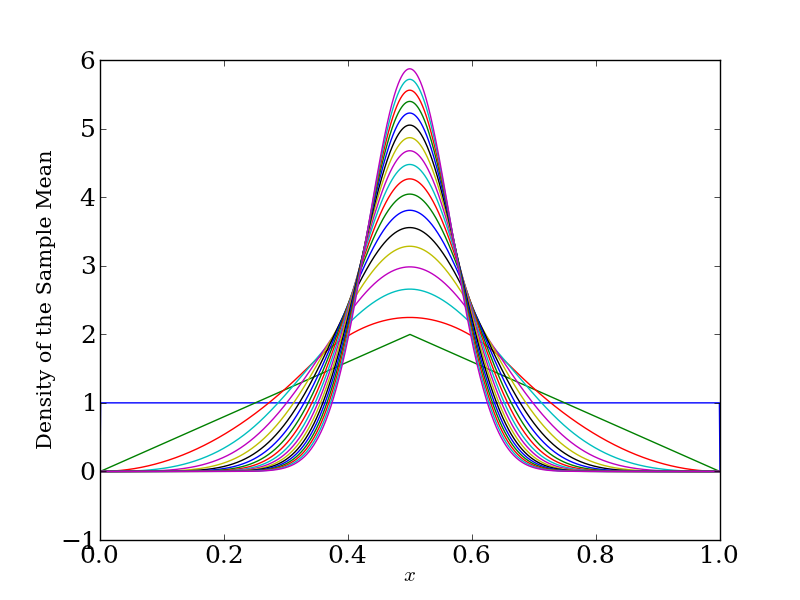

We've already seen one example of this previously. Namely, the differential entropy of a continuous random variable is not the limit of the Shannon entropy of the discretized random variable as we take the discretization to be finer and finer. In symbols, \[ H\left[X^{\Delta}\right] \nrightarrow h[X],\] but in fact \[ H\left[X^{\Delta}\right] + \log \Delta \to h[X],\] as we take \(\Delta\) smaller and smaller. This has consequences for people trying to compute 'entropy' (the type never named) from data. If you have a continuous signal, and you make an estimate of \(H\left[X^{\Delta}\right]\) by binning your data more and more finely to compute a histogram estimator \(\hat{f}(x)\) of the true density \(f(x)\), you should quickly notice that you're not converging on anything. In fact, as you bin more and more finely, in the limit of infinite data, you'll diverge to infinity. This is precisely because of the convergence result we saw above. If we are interested in the differential entropy \(h[X]\), we can estimate it directly by taking an estimator of the density \(f\), say, using a kernel density estimator, and computing the integral of interest, namely \[ \hat{h}[X] = \int_{\mathbb{R}} \hat{f}(x) \log \hat{f}(x) \, dx.\]

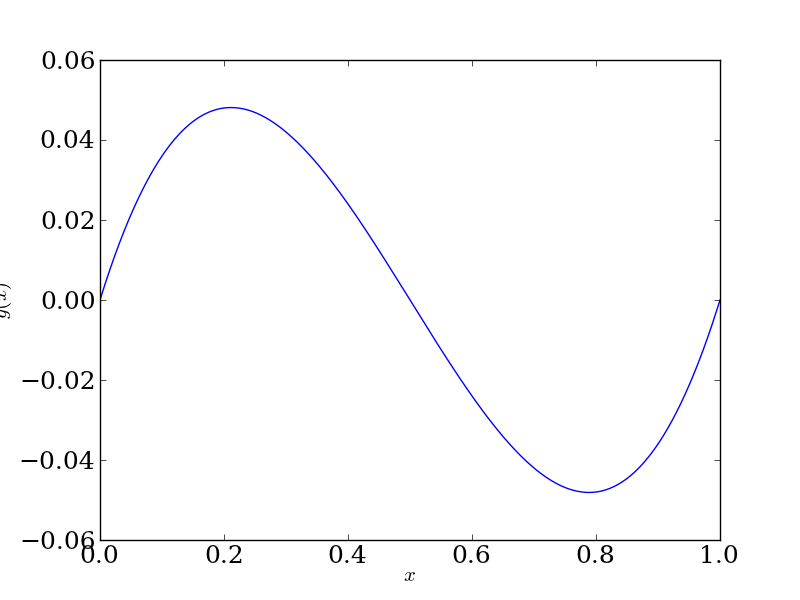

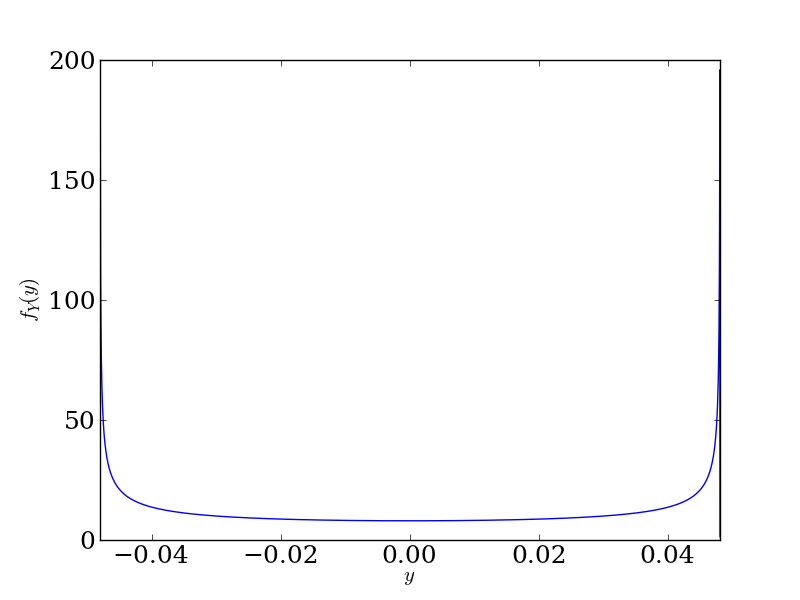

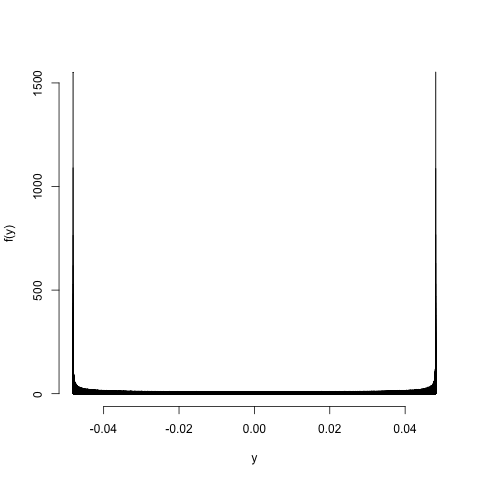

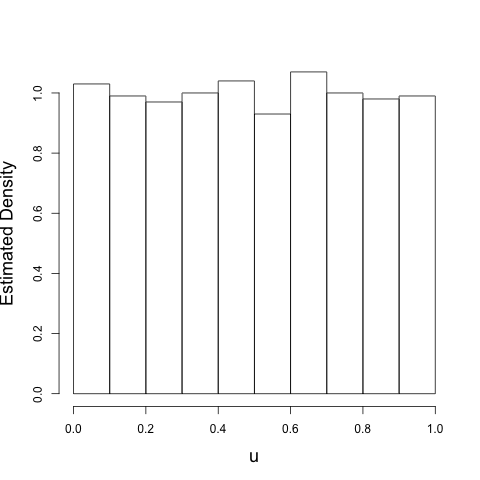

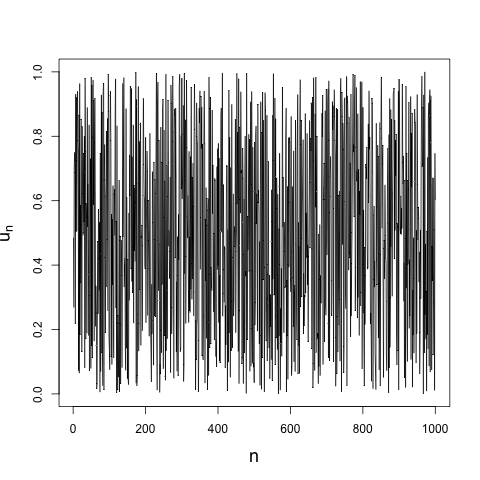

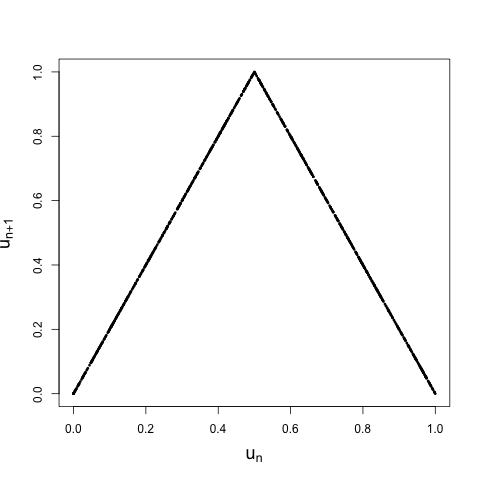

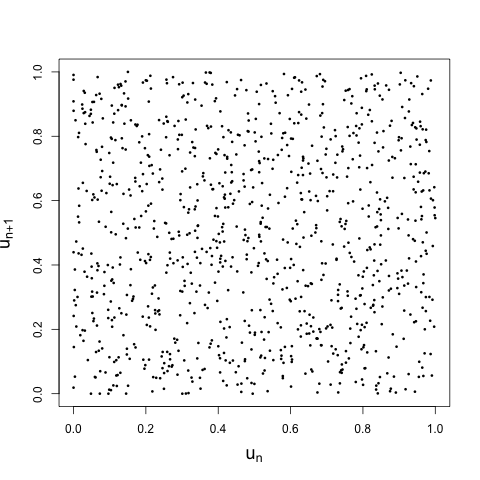

But usually we're not interested in the differential entropy. Instead, we might have two processes that we observe, \(X\) and \(Y\), and be curious about any structure between them. In this case, we might want to compute the mutual information between \(X\) and \(Y\), namely \[ I[X; Y] = \iint_{\mathbb{R}^{2}} f(x, y) \log \frac{f(x,y)}{f(x) f(y)} \, dA,\] where \(f(x,y)\) is the joint density of \((X,Y)\), and \(f(x)\) and \(f(y)\) are the marginal densities of \(X\) and \(Y\), respectively. This is a useful quantity, since it is exactly zero if and only if \(X\) and \(Y\) are independent. In the wild, we don't have the joint or marginal densities available to us. Instead, we observe a sequence \((X_{1}, Y_{1}), (X_{2}, Y_{2}), \ldots, (X_{n}, Y_{n})\), and want to estimate the mutual information. If this sequence is independent and identically distributed, the estimation process is straightforward enough. We can estimate the joint entropy \(f(x,y)\) directly from the data, giving us \(\hat{f}(x,y)\), and then compute the integral definition of mutual information. (We get the marginals 'for free' by integrating out the appropriate variable.) We would then have \[ \hat{I}[X; Y] = \iint_{\mathbb{R}^{2}} \hat{f}(x, y) \log \frac{\hat{f}(x,y)}{\hat{f}(x) \hat{f}(y)} \, dA,\] If we don't want to bother with things like kernel density estimators, we can again fall back on our histogram estimator. But unlike before, the histogram estimator gives us the right answer in the limit of smaller and smaller bin sizes. To see why, remember that \[ I[X; Y] = H[X] - H[X | Y]\] and thus \[ I\left[X^{\Delta}; Y^{\Delta}\right] = H\left[X^{\Delta}\right] - H\left[X^{\Delta} | Y^{\Delta}\right].\] But we've seen a way to write \(H\left[X^{\Delta}\right]\) as a Riemann sum. Similarly, we can write \(H\left[X^{\Delta} | Y^{\Delta}\right] = H\left[X^{\Delta}, Y^{\Delta}\right] - H\left[Y^{\Delta}\right]\) as a (double) Riemann sum, pulling the same mean value theorem trick as before. After we've done this, we'll see that \[ H\left[X^{\Delta}\right] "\to" h[X] - \log \Delta\] and \[ H\left[X^{\Delta} | Y^{\Delta}\right] "\to" h[X | Y] - \log \Delta,\] giving us that overall \[ I\left[X^{\Delta}; Y^{\Delta}\right] = H\left[X^{\Delta}\right] - H\left[X^{\Delta} | Y^{\Delta}\right] \to h[X] - h[X | Y] = I[X; Y].\]

In other words, researchers who blindly use histogram-based mutual information 'get lucky,' since their method turns out to work, in the limit of smaller and smaller bins and more and more data, at computing estimating mutual information. But as we saw with the histogram-based entropy estimator, this did not have to be the case.